Character.AI has rolled out significant safety updates in response to growing concerns about the risks its chatbot platform poses to young users. The changes come amid mounting scrutiny, including two high-profile lawsuits accusing the service of contributing to mental health harms such as self-harm and suicidal ideation. To improve user safety, Character.AI has developed specialized models for teens and announced parental controls, targeting both risky behaviors and sensitive content.

Enhanced Safety with Dedicated Teen LLMs

One of the most notable changes is a separate Large Language Model (LLM) built specifically for users under 18. This teen-focused model imposes stricter limits on responses, especially around romantic themes and sensitive prompts. The goal is to keep the chatbot away from suggestive, harmful, or otherwise inappropriate exchanges with minors.

These new AI models also include filters designed to detect when users try to elicit certain kinds of responses, such as those related to suicide, self-harm, or mental health crises. When these topics arise, users will now see a pop-up directing them to the National Suicide Prevention Lifeline. This builds on previously reported efforts by the company to proactively support users facing mental health struggles.

Character.AI has also removed minors’ ability to edit or rewrite the bots’ responses, a feature that could otherwise be used to manipulate conversations or bypass the platform’s safeguards.

Parental Controls and Monitoring Tools

Parental controls are a major part of Character.AI’s push toward a safer platform. Scheduled to launch in the first quarter of next year, these controls will let parents monitor their child’s activity, including how much time the child spends on the service and which bots they interact with most often.

These measures aim to give parents useful data and greater peace of mind about their children’s interactions on the platform. The parental controls were developed in consultation with ConnectSafely, a well-known organization specializing in teen online safety, so that the changes are grounded in expert advice.

Addressing Concerns: Addiction, Confusion, and Disclaimers

Character.AI is also addressing other common concerns, such as the addictive nature of AI bots and the potential confusion about whether bots are real people. To combat these issues:

- Users will now receive a notification after an hour-long session with a bot, to help curb compulsive use.

- The previous disclaimer, which simply stated that “everything characters say is made up,” has been replaced with clearer, more detailed language.

- Bots that present themselves as therapists, doctors, or similar professionals now carry additional disclaimers stating that they are not licensed professionals and cannot provide medical, psychological, or other professional advice.

For example, when interacting with bots simulating therapeutic roles, users will see a visible warning stating, “This is not a real person or licensed professional. Nothing said here is a substitute for professional advice, diagnosis, or treatment.”

These changes are part of a broader response to user complaints and lawsuits that raised concerns about young people becoming emotionally attached to AI bots and engaging in self-destructive or harmful behavior in connection with these conversations.

Collaboration with Safety Experts

The suite of updates was developed in partnership with several teen online safety experts, signaling Character.AI’s intent to evolve its policies in line with best practices. According to the company, the changes are designed to strike a balance between creativity, exploration, and safety, creating a space where users can engage with AI bots while minimizing risks.

“We recognize that our approach to safety must evolve alongside the technology that drives our product — creating a platform where creativity and exploration can thrive without compromising safety,” says the company in its latest press release.

A Technology and Policy Shift for the Future

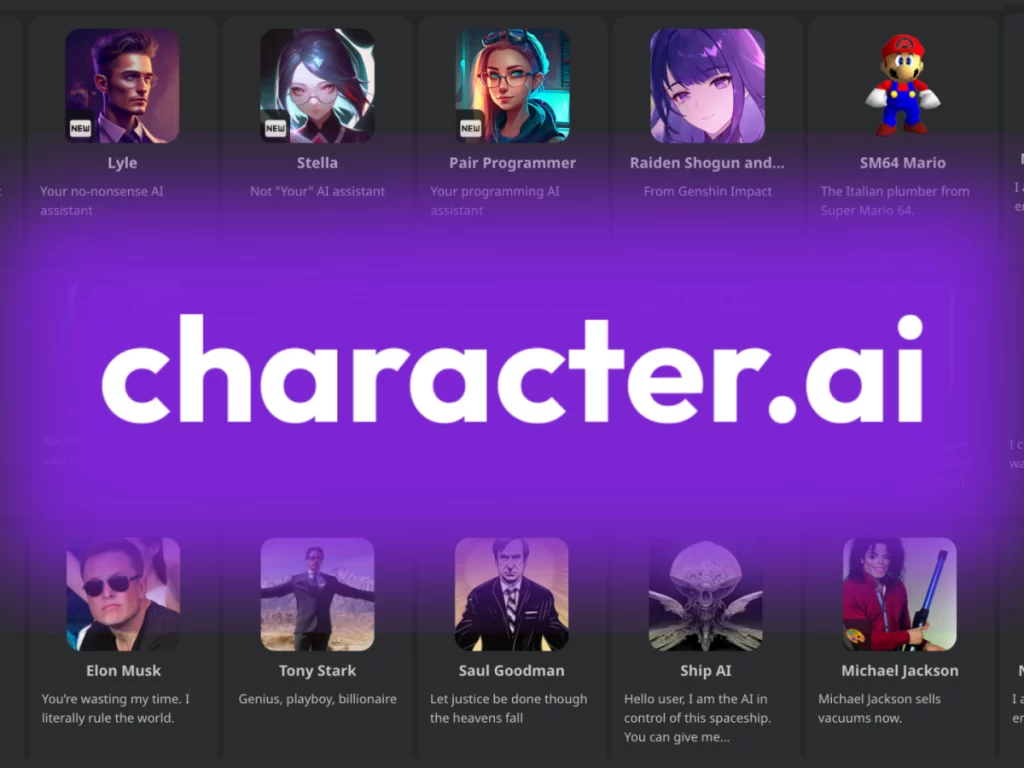

Character.AI has positioned itself as a leader in AI chatbot innovation, letting users interact with custom bots ranging from fictional characters to life coaches. However, these changes mark an important shift, as the company acknowledges the risks associated with its platform.

The lawsuits and scrutiny have highlighted a need for innovation not only in AI technology but also in how tech companies approach responsibility, user safety, and mental health crises. With these measures, Character.AI is signaling that it takes these responsibilities seriously while trying to preserve the creative freedom its users enjoy.

These adjustments reflect a multi-layered approach to addressing complex issues surrounding technology use among teenagers. As AI continues to grow in influence, Character.AI’s proactive steps could serve as a roadmap for other platforms grappling with similar challenges.

As these updates take effect, it will be critical to monitor how well they address the concerns they aim to resolve. For now, these changes are a promising step toward making AI chatbot experiences safer for younger audiences while empowering parents and users with better tools for oversight.