As concerns mount over the safety and privacy of young users on social media, Meta is taking new steps to protect teens on Instagram. The company has announced a set of features designed to address the growing problem of sextortion scams targeting teenagers, amid increasing pressure from lawmakers and advocacy groups over how social media platforms safeguard younger users.

Targeting Potential Scammers

In its latest update, Meta aims to make it harder for potentially malicious accounts to reach teens. Instagram will now automatically send follow requests from suspicious accounts to teens' spam folders or block them altogether, reducing the chances that scammers make contact with young users in the first place.

Instagram is also testing a safety notice for teens who receive messages from accounts flagged as suspicious. The notice warns that the sender appears to be located in a different country, a sign that the person may not be someone the teen knows, and is meant to make teens more alert to potential dangers in their DMs.

If Instagram detects that a potential scammer already follows a teen, it will block that account from viewing the teen's follower lists and the lists of accounts that have tagged the teen in photos. Meta has not disclosed exactly how it identifies these "potentially scammy" accounts, but it has indicated that signals such as account age and mutual connections play a role.

Combating the Spread of Intimate Images

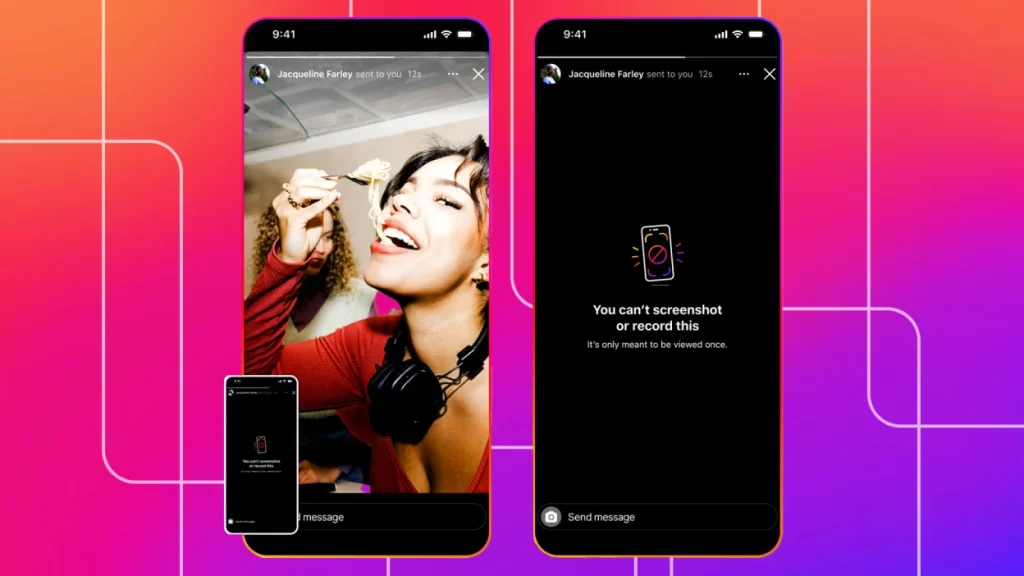

Instagram is also tightening controls on how intimate images spread through its direct messages. Users will no longer be able to take screenshots or screen recordings of images sent through Instagram's ephemeral (disappearing) messages, and such images will not be viewable on Instagram's web version, further enhancing privacy.

Meta is also expanding Instagram's nudity protection feature, which it tested earlier this year, to all teens using the app. The feature automatically blurs images detected as containing nudity and shows warnings and resources when such content appears. The goal is to prevent intimate images from being exploited and to make teens more aware of the risks of sharing personal content.

Addressing the Reality of Sextortion Scams

The changes introduced by Instagram are directly aimed at addressing the disturbing trend of sextortion scams. In these schemes, scammers manipulate teenagers into sharing intimate photos, which are then used to threaten and extort them. A report published earlier this year by Thorn and the National Center for Missing & Exploited Children highlighted that Instagram, alongside Snapchat, is one of the most frequently used platforms for initiating such scams.

Scammers often operate in organized groups, some of which have been found coordinating on Meta's own platforms. As part of its crackdown, Meta has taken action against numerous accounts tied to groups known to facilitate sextortion. The company recently reported removing over 800 Facebook groups and around 820 accounts linked to the Yahoo Boys, a notorious scam network that Meta identified as attempting to recruit and train new sextortion scammers.

Meta’s Ongoing Pressure to Improve Teen Safety

These updates come at a critical time for Meta, which faces mounting legal challenges over its responsibility for the safety of its youngest users. A lawsuit brought by more than 30 states, alleging that Meta designed its platforms in ways that harm minors, is currently underway, and a federal judge recently rejected Meta's attempt to have it dismissed, further intensifying scrutiny of the company.

Separately, New Mexico has filed its own lawsuit against Meta, accusing the company of failing to prevent adults from harassing teenagers on its apps, particularly Instagram. Together, these cases add urgency to Meta's efforts to strengthen its safety features and to show that it is taking appropriate action to protect vulnerable users.

New Features on Threads to Enhance User Engagement

While Meta focuses on teen safety on Instagram, it is also changing another of its apps, Threads. The company recently announced that Threads will introduce "activity status" indicators, which display a green bubble next to a user's profile picture when they are online. The goal is to encourage real-time conversations by showing users who is currently active.

Critics, however, have pointed out that activity status does not directly address Threads' need for more real-time content. Because Threads also lacks direct messaging, it is unclear how users are expected to act on seeing that someone is online. Meta is nonetheless positioning the feature as a way to connect users more effectively, and it remains to be seen how well this works in practice.

The Broader Context of Social Media Safety

As social media platforms face public and legal pressure over safety, new features and tools have become a central part of their response. Instagram's steps to prevent sextortion reflect a broader industry trend, as companies increasingly acknowledge the risks young users face online.

Meta's measures represent an important shift in how social media platforms address safety concerns. As online interaction evolves, the tools and strategies used to protect users, particularly the most vulnerable, must evolve with it. The Instagram updates are likely just the beginning of a longer-term effort by Meta to improve safety and foster a healthier online environment.

Conclusion

Meta's recent updates to Instagram reflect a growing awareness of the challenges of safeguarding young users on social media, while the Threads change is aimed at engagement rather than safety. The Instagram features targeting sextortion are Meta's response to both legal pressure and public concern about teen safety. As these platforms continue to evolve, companies like Meta will need to prioritize user security, especially for their youngest users, both to protect their reputations and for the well-being of the people who rely on them.